Self Hosting AI Stack using vSphere, Docker and NVIDIA GPU

Artificial intelligence is all the rage at the moment. It’s getting included in every product announcement from pretty much every vendor under the sun, and Nvidia’s stock price has gone to the moon. So I thought I had better get some knowledge and understand some of this.

As it’s a huge field and I wasn’t exactly sure where to start, I decided to follow Tim’s excellent video and guide and deploy what he has deployed. This blog is more of a reminder for me of what I have done. I won’t go into all the details, as Tim has done a better job than I will.


Introduction

Yes, you can do some AI things with a CPU, but the reality at the moment is that these workloads are better suited to a GPU, and depending on what you’re doing, one or more GPUs with lots of memory are what you need. I didn’t fancy spending any money, so I thought I would start with my Nvidia P4 and see where I get to.

Nvidia Tesla P4 Specs

The Nvidia P4 has 8GB of GDDR5 memory, which is enough for running some smaller models.
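As a rough way to judge whether a model might fit in 8GB, the size of its weights can be estimated as parameters times bits per weight divided by 8. The sketch below is only a back-of-the-envelope lower bound: it ignores the KV cache, activations and runtime overhead, and the 9-billion-parameter figure and the helper name `estimate_weights_gb` are illustrative assumptions rather than anything measured on this setup.

```shell
# Rough size of model weights in GB: parameters (billions) x bits per weight / 8.
# Ignores KV cache, activations and runtime overhead, so treat it as a lower bound.
estimate_weights_gb() {
    params_billion="$1"
    bits_per_weight="$2"
    awk -v p="$params_billion" -v b="$bits_per_weight" \
        'BEGIN { printf "%.1f\n", p * b / 8 }'
}

estimate_weights_gb 9 4     # hypothetical 9B model quantised to 4 bits -> 4.5
estimate_weights_gb 9 16    # the same model at 16 bits -> 18.0
```

On these numbers a 4-bit quantised model of that size would leave some headroom on the card, while the 16-bit version would not fit at all.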

OS Choice

I chose to use one of my vSphere hosts with a VM running Ubuntu 24.04.

vHardware Spec

In vSphere, I allocated 8x vCPUs, 20GB of memory, and 256GB of storage to the VM with the P4 passed in directly. This means I didn’t need any additional NVIDIA licences.
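Once the guest OS is installed, one way to confirm that the passthrough worked, before any drivers are involved, is to look for the card on the guest's PCI bus. This is a minimal sketch, assuming the `pciutils` package (which provides `lspci`) is present in the VM. The helper name `find_nvidia_devices` is made up for illustration.

```shell
# Print only the lines on stdin that mention NVIDIA (case-insensitive).
# Reading stdin means it also works on saved lspci output.
find_nvidia_devices() {
    grep -i 'nvidia' || true   # no match is not treated as an error
}

# Usage inside the guest:
#   lspci | find_nvidia_devices
```

If nothing is printed, the device has probably not been attached to the VM, and installing drivers will not help until that is fixed.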

Install Ubuntu 24.04 (or use your template) and make sure it’s patched.

sudo apt-get update && sudo apt-get upgrade

Install NVIDIA Software

The first step is to install the Nvidia drivers.

sudo ubuntu-drivers install

Reboot, then run “sudo nvidia-smi” to validate that the card is now functioning within the VM. The output below shows that the Ubuntu server can see the Tesla P4.

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 470.256.02 Driver Version: 470.256.02 CUDA Version: 11.4 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 Tesla P4 Off | 00000000:0B:00.0 Off | 0 |

| N/A 46C P0 23W / 75W | 0MiB / 7611MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

| No running processes found |

+-----------------------------------------------------------------------------+

Install Docker

The next step is to install Docker, as the AI engines will all be running in Docker containers.

# Add Docker's official GPG key:

sudo apt-get update

sudo apt-get install ca-certificates curl gnupg

sudo install -m 0755 -d /etc/apt/keyrings

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /etc/apt/keyrings/docker.gpg

sudo chmod a+r /etc/apt/keyrings/docker.gpg

# Add the repository to Apt sources:

echo \

"deb [arch="$(dpkg --print-architecture)" signed-by=/etc/apt/keyrings/docker.gpg] https://download.docker.com/linux/ubuntu \

"$(. /etc/os-release && echo "$VERSION_CODENAME")" stable" | \

sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

sudo apt-get update

sudo apt-get install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin

Use Docker without SUDO

sudo usermod -aG docker $USER

Log out and back in (or run “newgrp docker”) for the new group membership to take effect.

NVIDIA Container Toolkit

The next thing to do is to install the NVIDIA Container Toolkit. This allows containers to interact with the graphics card.

curl -fsSL https://nvidia.github.io/libnvidia-container/gpgkey | sudo gpg --dearmor -o /usr/share/keyrings/nvidia-container-toolkit-keyring.gpg \

&& curl -s -L https://nvidia.github.io/libnvidia-container/stable/deb/nvidia-container-toolkit.list | \

sed 's#deb https://#deb [signed-by=/usr/share/keyrings/nvidia-container-toolkit-keyring.gpg] https://#g' | \

sudo tee /etc/apt/sources.list.d/nvidia-container-toolkit.list

sudo apt-get update

sudo apt-get install -y nvidia-container-toolkit

sudo nvidia-ctk runtime configure --runtime=docker

sudo systemctl restart docker

Test NVIDIA Docker

sudo docker run --rm --runtime=nvidia --gpus all ubuntu nvidia-smi

If the above worked, you have a functioning system where Docker can communicate with your GPU.
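To make that check scriptable rather than eyeballing the table, nvidia-smi's CSV query mode can be parsed. The sketch below is one possible approach, not part of Tim's guide. The helper name `parse_gpus` is an assumption for illustration, and it expects input in the form `name, <number> MiB`, as produced by `--query-gpu=name,memory.total --format=csv,noheader`.

```shell
# Print one "name<TAB>MiB" line per GPU from `name, memory.total` CSV on stdin.
# Lines that do not look like "<name>, <number> MiB" (e.g. error text) are ignored.
parse_gpus() {
    awk -F', ' '$2 ~ /^[0-9]+ MiB$/ { sub(/ MiB$/, "", $2); print $1 "\t" $2 }'
}

# Usage, with the same image and runtime flags as the test command:
#   sudo docker run --rm --runtime=nvidia --gpus all ubuntu \
#       nvidia-smi --query-gpu=name,memory.total --format=csv,noheader | parse_gpus
```

An empty result would suggest the container cannot see the GPU, in which case the runtime configuration step is the first thing to recheck.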

Overview

Tim’s blog has a decent overview of the containers and what they are doing so I won’t detail it here.

Chat in Action

The next obvious thing to do was ask it some questions. I asked the gemma2 model the question “What AI models can I run on a Nvidia p4?” and it answered:

You’re right to be specific! While the Nvidia P4 is technically an AI-capable GPU, it’s designed more for inference (running pre-trained models) than training complex ones from scratch. Think of it like a powerful calculator for AI, not a research lab.

Here’s a breakdown of what you can realistically expect on an Nvidia P4:

1. Lightweight Models are Your Friend:

- Object Detection: Tiny YOLOv3 variants or lightweight MobileNet-based detectors. Expect lower accuracy than bigger models but fast inference speeds for real-time applications.

- Image Classification: MobileNet V2, Inception v3 (downscaled), SqueezeNet – these are pre-trained and optimized for mobile devices.

2. Frameworks Matter:

- TensorRT : This is Nvidia’s specialized engine for P4. It optimizes your models for performance, even converting them into a more efficient format.

- ONNX Runtime : This open-source runtime supports various AI frameworks, and can be used to run models on the P4 after conversion.

3. Practical Applications:

- Edge Devices: Think smart cameras, embedded systems, or robotics where low power consumption and quick responses are crucial.

- Demo Projects: Great for learning about deployment and seeing how AI works in real-time, even if the accuracy isn’t state-of-the-art.

What You CAN’T Do:

- Train massive neural networks from scratch (think GPT-3 or large image generators). The P4 lacks the memory and compute power.

- Run cutting-edge research models that demand immense resources. Those are best left to specialized cloud GPUs or supercomputers.

Let me know if you have a specific AI task in mind – I might be able to suggest suitable pre-trained models or optimizations for your Nvidia P4 setup!

I was certainly impressed with that response.

Power Consumption

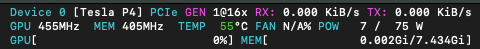

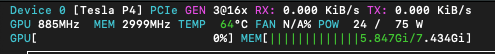

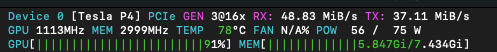

Obviously, I am very aware of the power usage in my lab, so I thought it would be interesting to dig into how much running chat queries might impact things. According to nvtop, the P4 normally idles at around 7 watts. However, once a model is loaded, even if it’s not running any queries, this increases to around 24 watts, probably due to the increased memory clock speed.

When queries are running it will typically use 50-60 watts.
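To put those figures in context, a steady draw in watts converts to energy per day as W × 24 / 1000 kWh. The sketch below is a simple illustration of that arithmetic using the readings quoted above. The helper name `kwh_per_day` is made up, and it assumes the draw stays constant all day, which real usage will not.

```shell
# Convert a steady power draw in watts to energy per day in kWh: W * 24 h / 1000.
kwh_per_day() {
    awk -v w="$1" 'BEGIN { printf "%.3f\n", w * 24 / 1000 }'
}

kwh_per_day 7     # idle, no model loaded         -> 0.168
kwh_per_day 24    # model loaded, no queries      -> 0.576

# A live reading could be fed in the same way, for example:
#   kwh_per_day "$(nvidia-smi --query-gpu=power.draw --format=csv,noheader,nounits)"
```

On those numbers, simply leaving a model loaded costs roughly 0.4 kWh per day more than an idle card.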

Summary

Obviously, it’s early days for my experimentation in what is a large and rapidly changing field, but it’s certainly something I will be playing with a lot.