How I Migrated from Pocket to Hoarder with AI Integration

Update: Hoarder has now been renamed to Karakeep due to a trademark issue

I’ve been on a mission recently to regain control of my data. I haven’t yet faced the humongous task of moving my main email from Gmail, but I have had some successes with other cloud services and a win is a win….

One of them is my bookmark manager. Up until now, I used Pocket since way back in the day when it was originally called “Read it Later”. I wanted to bring it away from a cloud service and host it locally. I also had the opportunity to add a little AI magic to go with it.

Table of Contents

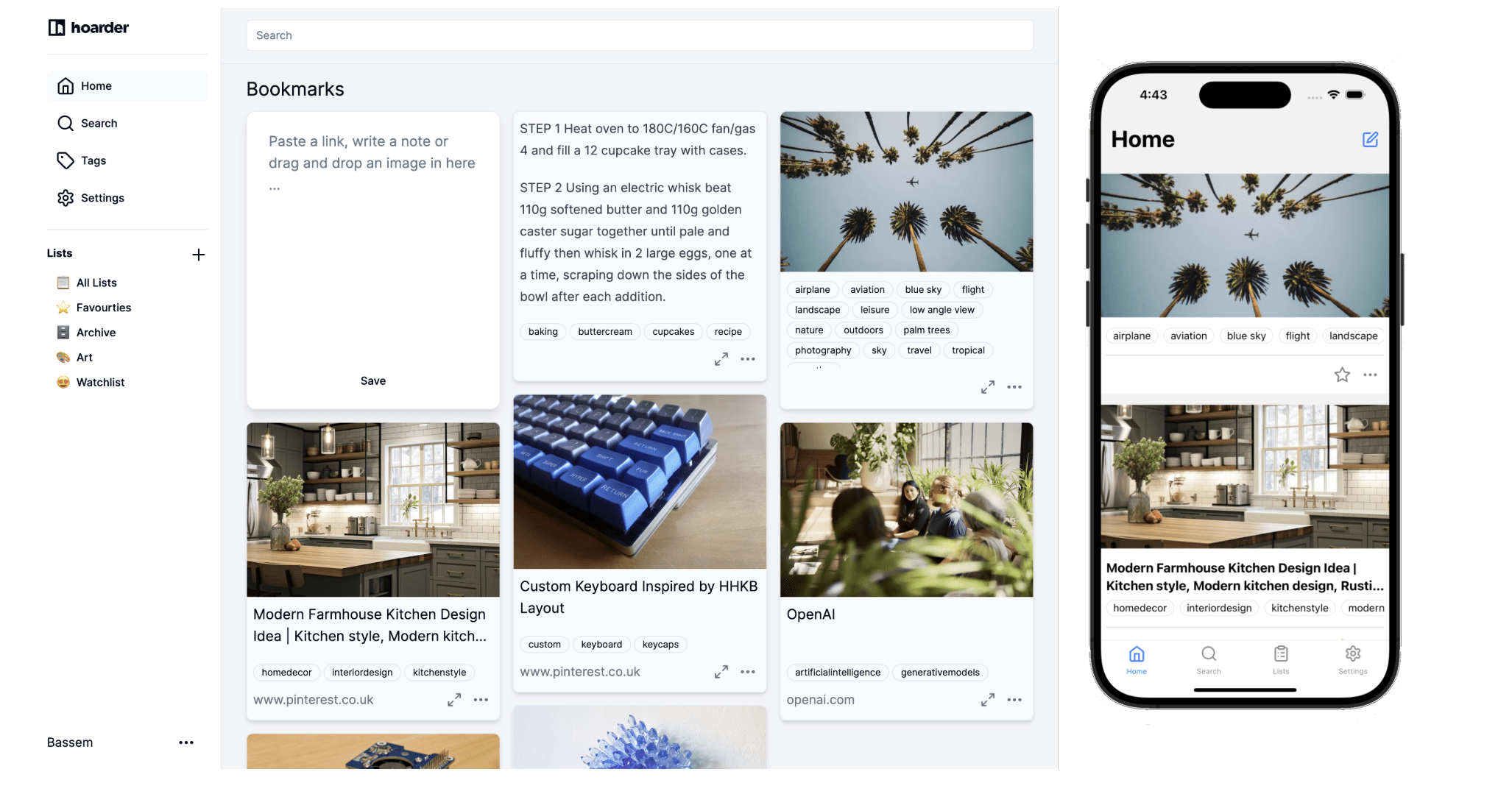

What is Hoarder?

Taken from the Hoarder website, it’s an App that can do all of this….

- 🔗 Bookmark links, take simple notes and store images and pdfs.

- ⬇️ Automatic fetching for link titles, descriptions and images.

- 📋 Sort your bookmarks into lists.

- 🔎 Full text search of all the content stored.

- ✨ AI-based (aka chatgpt) automatic tagging. With support for local models using Ollama!

- 🎆 OCR for extracting text from images.

- 🔖 Chrome plugin and Firefox addon for quick bookmarking.

- 📱 An iOS app, and an Android app.

- 📰 Auto hoarding from RSS feeds.

- 🔌 REST API.

- 🌐 Mutli-language support.

- 🖍️ Mark and store highlights from your hoarded content.

- 🗄️ Full page archival (using monolith) to protect against link rot. Auto video archiving using youtube-dl.

- ☑️ Bulk actions support.

- 🔐 SSO support.

- 🌙 Dark mode support.

- 💾 Self-hosting first.

The main use for me is having a website and mobile app for saving pages useful to me that I found when I’m usually down a tech rabbit hole.

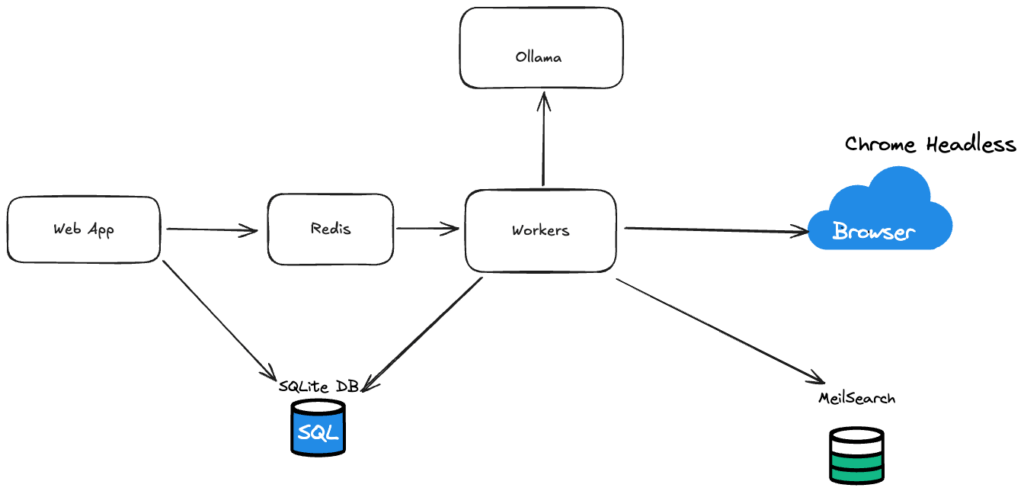

Hoarder Architecture

I have chosen to run this within my home infrastructure and connected it to my existing Ollama setup This means that Hoarder can call Ollama for AI text/image clarification using the setup and models I have already created.

The deployment is all within Docker and I have added extracts from my Docker Compose files below.

Hoarder Install

Snippet from my docker-compose.yml

Hoarder

hoarder:

image: ghcr.io/hoarder-app/hoarder:${HOARDER_VERSION:-release}

restart: unless-stopped

networks:

- traefik

volumes:

- ./data:/data

env_file:

- .env

environment:

MEILI_ADDR: http://meilisearch:7700

BROWSER_WEB_URL: http://chrome:9222

DATA_DIR: /data

labels:

- "com.example.description=hoarder"

- "traefik.enable=true"

- "traefik.http.routers.hoarder.rule=Host(`hoarder.jameskilby.cloud`)"

- "traefik.http.routers.hoarder.entrypoints=https"

- "traefik.http.routers.hoarder.tls=true"

- "traefik.http.routers.hoarder.tls.certresolver=cloudflare"

- "traefik.http.services.hoarder.loadbalancer.server.port=3000"

chrome:

image: gcr.io/zenika-hub/alpine-chrome:123

restart: unless-stopped

networks:

- traefik

command:

- --no-sandbox

- --disable-gpu

- --disable-dev-shm-usage

- --remote-debugging-address=0.0.0.0

- --remote-debugging-port=9222

- --hide-scrollbars

meilisearch:

image: getmeili/meilisearch:v1.11.1

restart: unless-stopped

networks:

- traefik

env_file:

- .env

environment:

MEILI_NO_ANALYTICS: "true"

volumes:

- ./meilisearch:/meili_data.env snippet file

OLLAMA_BASE_URL=http://ollama:11434

INFERENCE_TEXT_MODEL=llama3.1:8b

INFERENCE_IMAGE_MODEL=llava

Export from Pocket

Luckily, Pocket has an export function that will dump all of your saved URL’s and tags into a single file. This can be run by navigating to https://getpocket.com/export when logged in.

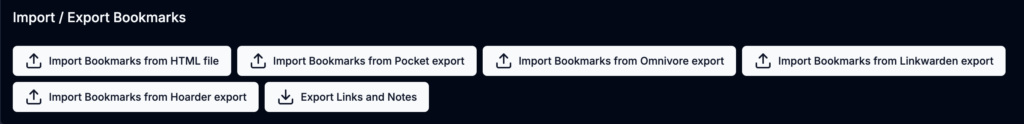

Import to Hoarder

Once you have this file, you can input it straight into Hoarder. This is done by navigating to the user settings section and then selecting import/export.

Hoarder supports several file formats from other tools

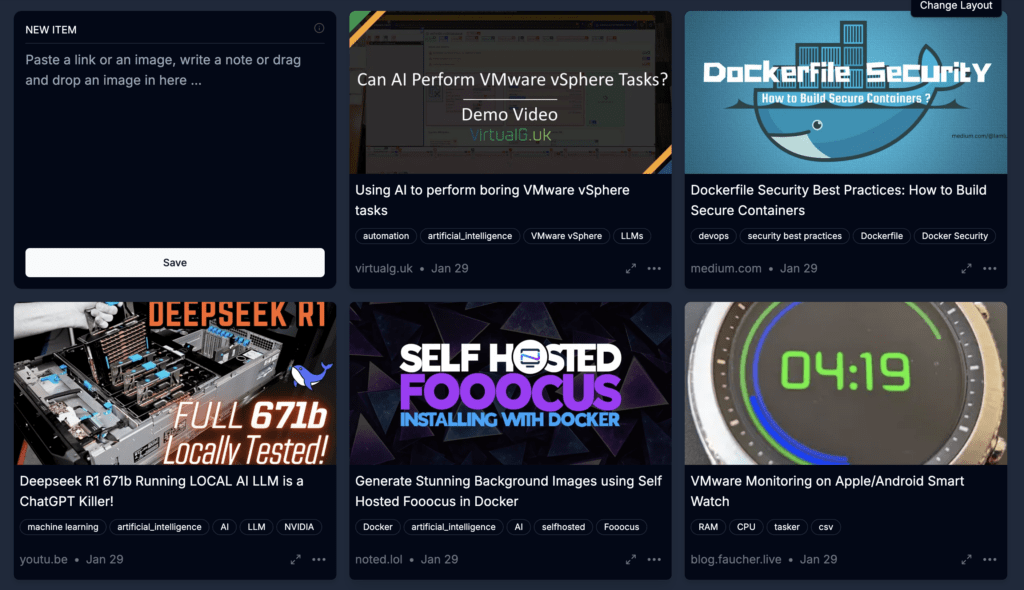

Hoarder In Action

When the URLs are loaded. Hoarder passes the URL’s into a headless Chrome to gather the data from that page. It then index’s the contents and then passes the contents to Ollama to apply appropriate tags.

AI Prompt

You can tweak the AI prompt that is sent over to Ollama. In my case I have just used the default as it looked like a good starting point. The prompt is

You are a bot in a read-it-later app and your responsibility is to help with automatic tagging.

Please analyze the text between the sentences "CONTENT START HERE" and "CONTENT END HERE" and suggest relevant tags that describe its key themes, topics, and main ideas. The rules are:

- Aim for a variety of tags, including broad categories, specific keywords, and potential sub-genres.

- The tags language must be in english.

- If it's a famous website you may also include a tag for the website. If the tag is not generic enough, don't include it.

- The content can include text for cookie consent and privacy policy, ignore those while tagging.

- Aim for 3-5 tags.

- If there are no good tags, leave the array empty.

CONTENT START HERE

<CONTENT_HERE>

CONTENT END HERE

You must respond in JSON with the key "tags" and the value is an array of string tags.The process of gathering all the web pages, indexing them and analysing with AI took around an hour with my setup. I believe that Hoarder has some internal throttles to try and avoid tripping anti-bot tools.

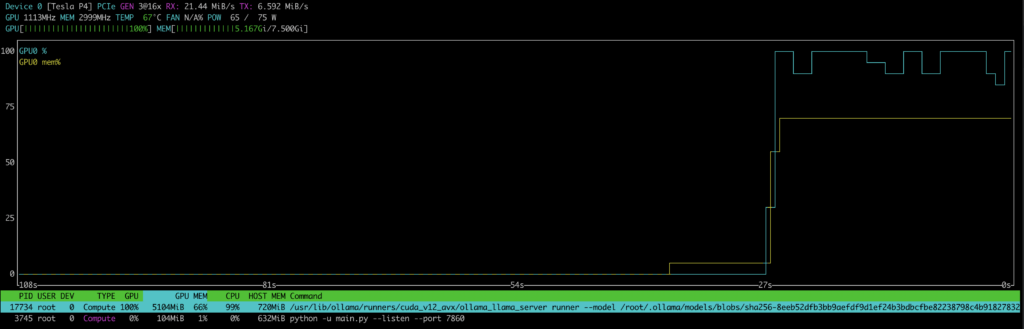

You can monitor this process in the admin section. I was also keen to see the stats on my graphics card while this was running so I ran NVTOP on the VM while some AI processing was running.

Finished Result

At the end of the process, I ended up with a little over 750 bookmarks imported and fully indexed and displayed in a very appealing nature.

Post Install Tasks

Tag Merge

The AI process is mightily impressive but one of the issues that can occur is it generate similar tags. Hoarder has the ability to allow you to merge them.

This is done in the cleanups section under your username. In my case it suggested 150 tags that may need merging. At the moment I am glad this has manual oversight as some should definitely be merged like “Motherboard” and “Motherboards” but some are totally different like “NAS” and “NASA”

Broken Links

I was quite surprised by how many of the links I had were broken links. These were for a few reasons, ranging from company takeovers. To sites being dead. Sadly, in one case the author is no longer with us. In a few cases, the broken link was because the URL I had saved led to a URL shortener that no longer exists. I need to revisit the remaining links in the list and ensure that they point to the end state.