My lab is constantly changing, and I will try to keep this page up to date with the tech I’m running. My setup is split between the local kit in my “server room” and the cloud (a mix of AWS and Cloudflare).


Obviously, with homelabs everyone has different requirements, and different opinions. My lab has grown organically over the last 10 years. Some kit has been purchased new, a lot has been bought secondhand off eBay, and some has been donated. If I were starting from scratch, I think it would look quite different. I suspect the replacement cost of what I’m currently running would be around the £15-20k mark.

I have several requirements: some are hard requirements, and others I try to meet where possible.

- Must be able to run vSphere (hardware on the HCL preferred)
- IPMI/remote management preferred
- Rack mount preferred
- Ability to achieve a low-power configuration for 24×7 operation
- Noise isn’t really a factor due to location
- Heat output isn’t a huge factor
- A GPU is essential in at least one node

Physical Hardware

Overview

Most of the serious hardware lives in a StarTech 25U open-frame server rack in the “server room”.

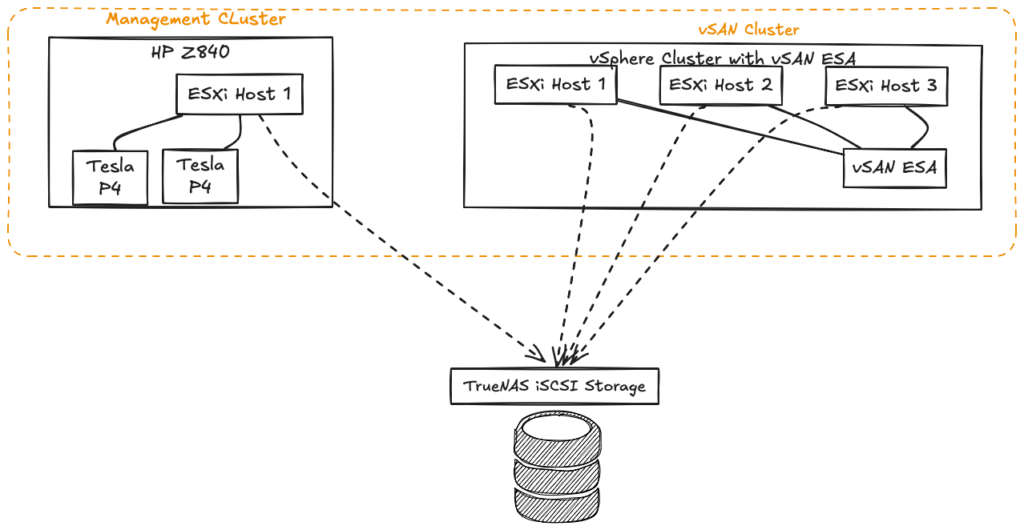

GPU/Management Cluster

This consists of a single HP Z840. It is an amazing workhorse and has been a key part of my lab for over 4 years, running multiple functions. It currently runs vSphere, and I often use it to run Holodeck on top of vSphere.

| Component | Description |
|---|---|
| Model | HP Z840 |
| CPU | 2x Intel Xeon E5-2673 v3 @ 2.40GHz, 30MB cache, 5.0 GT/s, 105W |
| RAM | 256GB |
| NIC 0 | Supermicro Intel AOC-STGN-i2S 10Gb dual-port |
| Storage 0 | Intel Optane DC P4800X, used as a local NVMe datastore |
| Storage 1 | Intel 2TB NVMe, used as a local NVMe datastore |
| GPU 0 | NVIDIA GeForce GT 730 (the Z840 would not boot without a graphics card installed) |
| GPU 1 | NVIDIA A10 (Ampere) |
| Boot Volume | 2x 1TB RAID 1 |

Compute Cluster

Nutanix NX – 3x NX-1365-G4

This is a significant resource that runs a lot of my workloads; however, due to electricity costs, it does not run all the time. It is made up of three nodes in a Supermicro Big-Twin chassis, each with the identical configuration below.

It runs vSphere 8, with vSAN ESA providing storage.

| Component | Description |
|---|---|
| Model | NXS2U4NL12G400 |
| CPU | 2x Intel Xeon E5-2640 v3 |
| RAM | 256GB |
| NIC | Intel Ethernet Controller XXV710 for 25GbE SFP28 |
| Boot Disk | 64GB SATADOM |
| SSD 1 | Samsung PM863 960GB |
| SSD 2 | Samsung PM863 960GB |
| SSD 3 | Samsung PM863 960GB |
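To put rough numbers on why the cluster isn’t left on 24×7, here is a minimal Python sketch of the arithmetic. The wattage and tariff are hypothetical placeholders, not measurements from this lab; plug in real figures from a power meter and your own bill.

```python
# Rough running-cost estimate for kit left powered on.
# All example inputs are HYPOTHETICAL placeholders, not measured values.

HOURS_PER_YEAR = 24 * 365  # 8760 hours, ignoring leap years


def annual_energy_kwh(avg_watts: float, hours: float = HOURS_PER_YEAR) -> float:
    """Energy in kWh for a constant average draw (watts) over `hours`."""
    return avg_watts * hours / 1000


def annual_cost_gbp(avg_watts: float, pence_per_kwh: float,
                    hours: float = HOURS_PER_YEAR) -> float:
    """Cost in GBP for the given average draw and tariff (pence per kWh)."""
    return annual_energy_kwh(avg_watts, hours) * pence_per_kwh / 100


if __name__ == "__main__":
    # Hypothetical: a 3-node cluster averaging 450 W on a 25p/kWh tariff.
    always_on = annual_cost_gbp(450, 25)
    # The same cluster powered on for only 8 hours a day.
    part_time = annual_cost_gbp(450, 25, hours=8 * 365)
    print(f"24x7: £{always_on:.2f}/year, 8h/day: £{part_time:.2f}/year")
```

With those made-up inputs the always-on figure is roughly three times the part-time one, which is simply the 24h vs 8h ratio; the real saving depends on idle draw and tariff.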

Storage

Primary Storage

Quanta D51PH-1ULH (66.3TiB usable)

A Quanta server running TrueNAS SCALE. This was originally configured with striped mirrors; I have since changed it to a RAIDZ2 layout, which gives me 66.3TiB usable. Storage is presented over SMB/NFS and iSCSI to multiple environments. More details are here.
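The capacity gain from moving off striped mirrors comes down to how much raw space each layout spends on redundancy. The Python sketch below compares the two for a hypothetical pool of six 10 TB disks (not this server’s actual disk count or size), and it ignores ZFS metadata, slop space and padding, so real pools report somewhat less.

```python
# Compare usable capacity of two ZFS pool layouts for the same disks.
# Disk count and size in the example are HYPOTHETICAL; ZFS overhead is ignored.

TB = 10**12   # drives are sold in decimal terabytes
TIB = 2**40   # TrueNAS/ZFS report capacity in binary units


def striped_mirrors_bytes(disks: int, disk_bytes: int, width: int = 2) -> int:
    """Striped mirrors: each vdev of `width` disks stores one disk's worth of data."""
    if disks % width:
        raise ValueError("disk count must be a multiple of the mirror width")
    return (disks // width) * disk_bytes


def raidz_bytes(disks: int, disk_bytes: int, parity: int = 2) -> int:
    """One RAIDZ vdev: `parity` disks' worth of space holds parity (2 for RAIDZ2)."""
    if disks <= parity:
        raise ValueError("need more disks than parity devices")
    return (disks - parity) * disk_bytes


if __name__ == "__main__":
    disks, size = 6, 10 * TB  # hypothetical pool: six 10 TB drives
    for name, usable in [
        ("striped mirrors", striped_mirrors_bytes(disks, size)),
        ("RAIDZ2", raidz_bytes(disks, size)),
    ]:
        print(f"{name}: {usable / TIB:.1f} TiB before ZFS overhead")
```

The trade-off is that mirrors give better random IOPS and faster resilvers, while a single RAIDZ2 vdev survives any two disk failures and wastes less space. Very wide pools are often split into several RAIDZ2 vdevs, each of which pays its own two parity disks.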

Secondary Storage

Synology DS1512+ 4x8TB SHR1 (21.8TiB usable)

This is in my office and is used for “offsite backups”.
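The 21.8TiB figure follows from single-disk-redundancy SHR plus the decimal-to-binary unit conversion. This Python sketch assumes the common rule of thumb that SHR-1 usable space is roughly the total of all drives minus the largest one (identical to RAID 5 when all drives match), and it ignores filesystem overhead.

```python
# Estimate Synology SHR-1 usable capacity and convert decimal TB to binary TiB.
# Assumed rule of thumb: usable ~= sum of drives minus the largest drive.
# Filesystem overhead is ignored.

TB = 10**12
TIB = 2**40


def shr1_usable_bytes(drive_bytes: list[int]) -> int:
    """Approximate SHR-1 usable capacity: total minus the largest drive."""
    if len(drive_bytes) < 2:
        raise ValueError("need at least two drives for single-drive redundancy")
    return sum(drive_bytes) - max(drive_bytes)


if __name__ == "__main__":
    drives = [8 * TB] * 4  # the DS1512+ above: 4x 8TB
    usable = shr1_usable_bytes(drives)
    print(f"{usable / TB:.0f} TB = {usable / TIB:.1f} TiB usable")
    # -> 24 TB = 21.8 TiB usable
```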

| System | Model | NVMe Capacity (TiB) | SSD Capacity (TiB) | HDD Capacity (TiB) |
|---|---|---|---|---|
| HP | HP Z840 | 2.6 | | |
| vSAN ESA | 3x Nutanix NX-1365-G4 | 0 | 7.42 | |
| Quanta | Quanta D51PH-1ULH | 0 | 0 | 66.3 |
| Synology | Synology DS1512+ | | | 21.8 |
| Total (usable) | | 2.6 | 7.42 | 88.1 |
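Totals rows like this drift as hardware changes, so one option is to compute them from the per-system figures. This Python sketch sums usable capacity per tier using the values from the storage table; it assumes the Synology’s 21.8TiB belongs in the HDD tier.

```python
# Recompute per-tier usable totals (TiB) from the per-system rows of the
# storage table, so the "Total (usable)" row can't go stale.

from collections import defaultdict

# (system, tier, usable TiB); Synology counted as HDD (assumption)
CAPACITIES = [
    ("HP Z840", "NVMe", 2.6),
    ("vSAN ESA", "SSD", 7.42),
    ("Quanta D51PH-1ULH", "HDD", 66.3),
    ("Synology DS1512+", "HDD", 21.8),
]


def totals_by_tier(rows):
    """Sum usable capacity per storage tier."""
    totals = defaultdict(float)
    for _system, tier, tib in rows:
        totals[tier] += tib
    return dict(totals)


if __name__ == "__main__":
    for tier, tib in totals_by_tier(CAPACITIES).items():
        print(f"{tier}: {tib:.2f} TiB")
```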

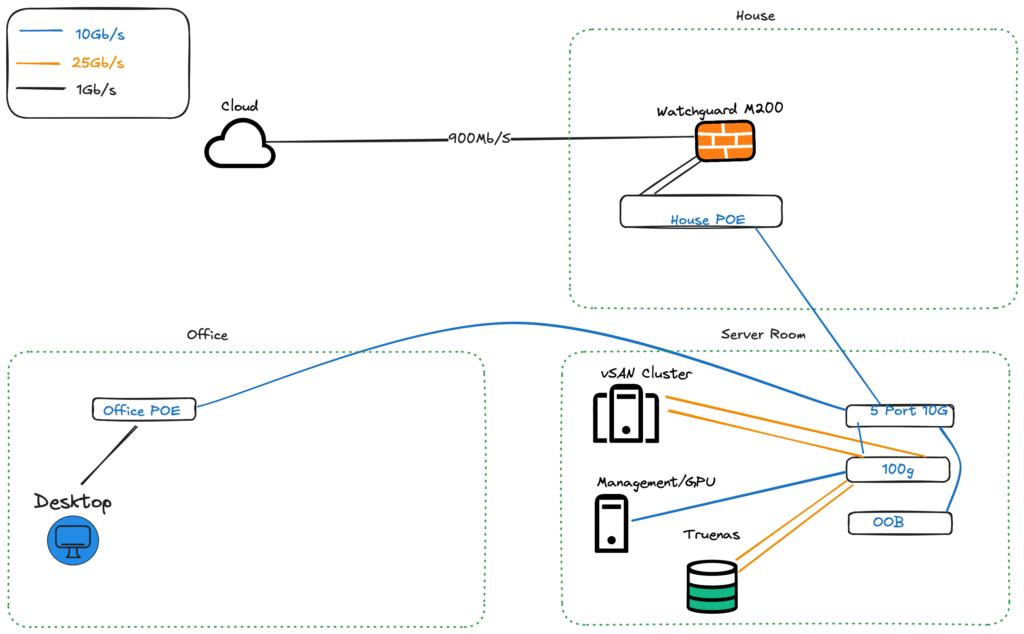

Network

Physical Network

| Model | Description |
|---|---|
| WatchGuard M200 | Core firewall |
| MikroTik CRS504-4XQ-IN | Core fibre switch |
| MikroTik CSS610-8P-2S+IN | PoE switch |
| MikroTik CRS305-1G-4S+ | Fibre-to-Ethernet switch |

Wireless Network

| AP Model | Description |
|---|---|
| Ubiquiti UniFi AC Pro | Access point (office) |
| Ubiquiti UniFi 6 Pro | Access point (house) |

WAN

| WAN Provider | Description |
|---|---|
| Zen FTTP (via CityFibre) | 900/900 Mbps with a /29 IPv4 block |
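For anyone unfamiliar with what a /29 provides, Python’s standard `ipaddress` module makes the arithmetic concrete. The prefix used here, 203.0.113.8/29, is a documentation range (RFC 5737 TEST-NET-3) standing in for the real block.

```python
# What a /29 IPv4 allocation contains. 203.0.113.8/29 is a documentation
# prefix (RFC 5737 TEST-NET-3), used here in place of a real assignment.
import ipaddress

block = ipaddress.ip_network("203.0.113.8/29")

# hosts() skips the network and broadcast addresses
hosts = list(block.hosts())

print(f"{block}: {block.num_addresses} addresses, {len(hosts)} assignable")
print(f"network {block.network_address}, broadcast {block.broadcast_address}")
print("hosts:", ", ".join(str(h) for h in hosts))
```

A /29 is 8 addresses, 6 of them assignable. On many ISPs one of those 6 is taken by the provider’s gateway, so check your provider’s documentation for how many are actually free.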